(Chinese) Technical Biweekly Report | Taskbar locking support + kernel performance tuning: a genuinely practical round of optimizations!

17 June, 2025 08:21AM by xiaofei

Canonical, the publisher of Ubuntu and trusted open source solutions provider, is proud to sponsor HPE Discover Las Vegas 2025. Join us from June 23–26 to explore how our collaboration with Hewlett Packard Enterprise (HPE) is transforming the future of enterprise IT, from virtualization and cloud infrastructure to AI/ML workloads.

Register for HPE Discover Las Vegas 2025

Stop by our booth to engage with our team and get a closer look at our latest innovations. Here’s what’s in store:

Visit our booth and attend sessions led by industry experts covering a range of open source solutions. Plus, all attendees will receive a special gift!

Discover how to gain control over your infrastructure, optimize costs, and automate operations while building a flexible, secure foundation that scales seamlessly with your business growth – whether integrated into your GreenLake multi-cloud strategy or deployed as a standalone private cloud.

From Kubeflow for MLOps to Charmed Kubernetes for orchestration, see how open source AI infrastructure drives innovation while reducing complexity and costs.

Learn how Ubuntu powers HPE VM Essentials to deliver the simplicity, security, and scalability your business demands – making enterprise virtualization accessible to organizations of every size.

As a strategic partner of HPE and a member of the HPE Technology Partner Program, Canonical brings decades of open source innovations to enterprise-grade solutions. Together, we deliver a full-stack experience — with integrated, secure, and cost-effective platforms that scale with your business.

Through our joint collaboration, organizations gain:

Learn more about our offerings and how Canonical and HPE can propel your business forward.

Want to see more?

Stop by booth #2235 to speak to our experts.

Are you interested in setting up a meeting with our team?

Reach out to our Alliance Business Director:

Valerie Noto – valerie.noto@canonical.com

Welcome back! If you've been following our PureOS Crimson milestones, you'll see that the few remaining tasks relate to providing ready-to-flash images for the Librem 5.

The post PureOS Crimson Development Report: May 2025 appeared first on Purism.

16 June, 2025 04:26PM by Purism

In a recent interview republished by Yahoo Finance, Purism CEO Todd Weaver explained why the Liberty Phone, Purism’s secure made in the USA smartphone, is exempt from U.S. tariffs targeting smartphones manufactured in China—such as Apple’s iPhone.

The post Purism Liberty Phone free from tariffs, as reported by Yahoo Finance appeared first on Purism.

16 June, 2025 04:03PM by Purism

The Internet has changed a lot in the last 40+ years. Fads have come and gone. Network protocols have been designed, deployed, adopted, and abandoned. Industries have come and gone. The types of people on the internet have changed a lot. The number of people on the internet has changed a lot, creating an information medium unlike anything ever seen before in human history. There are a lot of good things about the Internet as of 2025, but there’s also an inescapable hole in what it used to be, for me.

I miss being able to throw a site up to send around to friends to play with without worrying about hordes of AI-feeding HTML combine harvesters DoS-ing my website, costing me thousands in network transfer for the privilege. I miss being able to put a lightly authenticated game server up and not worry too much at night – wondering if that process is now mining bitcoin. I miss being able to run a server in my home closet. Decades of cat-and-mouse games have rendered running a mail server nearly impossible. Those who are “brave” enough to try are met with weeks-long stretches of delivery failures and countless hours yelling ineffectually into a pipe that leads from the cheerful lobby of some disinterested corporation directly into a void somewhere 4 layers below ground level.

I miss the spirit of curiosity, exploration, and trying new things. I miss building things for fun without having to worry about being too successful, after which “security” offices start demanding my supplier paperwork in triplicate as heartfelt thanks from their engineering teams. I miss communities that are run because it is important to them, not for ad revenue. I miss community operated spaces and having more than four websites that are all full of nothing except screenshots of each other.

Every other page I find myself on now has an AI generated click-bait title, shared for rage-clicks all brought-to-you-by-our-sponsors–completely covered wall-to-wall with popup modals, telling me how much they respect my privacy, with the real content hidden at the bottom bracketed by deceptive ads served by companies that definitely know which new coffee shop I went to last month.

This is wrong, and those who have seen what was know it.

I can’t keep doing it. I’m not doing it any more. I reject the notion that this is as it needs to be. It is wrong. The hole left in what the Internet used to be must be filled. I will fill it.

Throughout the 2000s, some of my favorite memories were from LAN parties at my friends’ places. Dragging your setup somewhere, long nights playing games, goofing off, even building software all night to get something working: being able to do something fiercely technical in the context of a uniquely social activity. It wasn’t really much about the games or the projects; it was an excuse to spend time together, just hanging out. A huge reason I learned so much in college was that campus was a non-stop LAN party – we could freely stand up servers, talk between dorms on the LAN, and hit my dorm room computer from the lab. Things could go from individual to social in a matter of seconds. The Internet used to work this way: my dorm had public IPs handed out by DHCP, and my workstation could serve traffic from anywhere on the internet. I haven’t been back to campus in a few years, but I’d be surprised if this were still the case.

In December of 2021, three of us got together and connected our houses together in what we now call The Promised LAN. The idea is simple—fill the hole we feel is gone from our lives. Build our own always-on 24/7 nonstop LAN party. Build a space that is intrinsically social, even though we’re doing technical things. We can freely host insecure game servers or one-off side projects without worrying about what someone will do with it.

Over the years, it’s evolved very slowly—we haven’t pulled any all-nighters. Our mantra has become “old growth”, building each layer carefully. As of May 2025, the LAN is now 19 friends running around 25 network segments. Those 25 networks are connected to 3 backbone nodes, exchanging routes and IP traffic for the LAN. We refer to the set of backbone operators as “The Bureau of LAN Management”. Combined decades of operating critical infrastructure has driven The Bureau to make a set of well-understood, boring, predictable, interoperable and easily debuggable decisions to make this all happen. Nothing here is exotic or even technically interesting.

The hardest part, however, is rejecting the idea that anything outside our own LAN is untrustworthy (nearly irreversible damage inflicted on us by the Internet). We have solved this by not solving it. We strictly control membership: the absolute hard minimum for joining the LAN requires 10 years of friendship with at least one member of the Bureau, with another 10 years of friendship planned. Members of the LAN can veto new members even if all other criteria are met. Even with those strict rules, there’s no shortage of friends that meet the qualifications, but we are not equipped to take that many folks on. It’s hard to join, both socially and technically. Doing something malicious on the LAN requires a lot of highly technical effort upfront, and it would endanger a decade of friendship. We have relied on those human, social, interpersonal bonds to bring us all together. It’s worked for the last 4 years, and it should continue working until we think of something better.

We assume roommates, partners, kids, and visitors all have access to The Promised LAN. If they’re let into our friends' network, there is a level of trust that works transitively for us—I trust them to be on mine. This LAN is not for “security”, rather, the network border is a social one. Benign “hacking”—in the original sense of misusing systems to do fun and interesting things—is encouraged. Robust ACLs and firewalls on the LAN are, by definition, an interpersonal—not technical—failure. We all trust every other network operator to run their segment in a way that aligns with our collective values and norms.

Over the last 4 years, we’ve grown our own culture and fads—around half of the people on the LAN have thermal receipt printers with open access, for printing out quips or jokes on each other’s counters. It’s incredible how much network transport and a trusting culture gets you—there’s a 3-node IRC network, exotic hardware to gawk at, radios galore, a NAS storage swap, LAN only email, and even a SIP phone network of “redphones”.

We do not wish to, nor will we, rebuild the internet. We do not wish to, nor will we, scale this. We will never be friends with enough people, as hard as we may try. Participation hinges on us all having fun. As a result, membership will never be open, and we will never have enough connected LANs to deal with the technical and social problems that start to happen with scale. This is a feature, not a bug.

This is a call for you to do the same. Build your own LAN. Connect it with friends’ homes. Remember what is missing from your life, and fill it in. Use software you know how to operate and get it running. Build slowly. Build your community. Do it with joy. Remember how we got here. Rebuild a community space that doesn’t need to be mediated by faceless corporations and ad revenue. Build something sustainable that brings you joy. Rebuild something you use daily.

Bring back what we’re missing.

16 June, 2025 12:55PM by Joseph Lee

This week’s Armbian updates focused on kernel improvements, bootloader modernization, and several core enhancements to the build infrastructure. Key work spanned platforms like Rockchip, Sunxi, and Odroid, emphasizing kernel stability and broader compatibility across boards.

Several boards received kernel updates:

Patches also landed to adapt Wi-Fi drivers to 6.15-era changes, including fixes for xradio and uwe5622 on Sunxi, contributed by The-going:

Improvements were made to bootloader support:

Several build system enhancements landed this cycle:

PR #8259 and commit cdf71df by djurny expanded DHCP configuration in netplan to automatically include interfaces matching lan* and wan*, simplifying initial setup across devices.
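The PR itself is not reproduced here, so the exact netplan stanza Armbian ships is not shown. As a rough sketch of the matching behavior only, assuming the `lan*` and `wan*` names are treated as shell-style globs (netplan's `match: name:` key accepts globs), the Python below filters a hypothetical list of interface names the way such a rule would:

```python
from fnmatch import fnmatchcase

# Hypothetical patterns mirroring the lan*/wan* rule described above.
DHCP_PATTERNS = ("lan*", "wan*")


def dhcp_interfaces(names, patterns=DHCP_PATTERNS):
    """Return the interface names that match any shell-style glob pattern."""
    return [name for name in names if any(fnmatchcase(name, p) for p in patterns)]


if __name__ == "__main__":
    ifaces = ["lo", "eth0", "lan0", "lan1", "wan", "wlan0"]
    print(dhcp_interfaces(ifaces))  # ['lan0', 'lan1', 'wan']
```

Note that `wlan0` is not picked up: a glob has to match the whole interface name, so `lan*` only covers names that start with `lan`.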

The post Armbian Development Highlights: June 2–9, 2025 first appeared on Armbian.

12 June, 2025 10:16PM by Michael Robinson

Choosing an Application Delivery Controller (ADC) is not just about ticking boxes. It’s about making sure your infrastructure is prepared to deliver fast, secure, and resilient applications—without overengineering or overspending. From availability to security and automation, the ADC sits at the core of how your service behaves under pressure.

In this guide, we’ll walk through the key criteria that define a capable ADC, explaining not just what to look for, but why each factor matters.

An ADC should grow with your business—not become a bottleneck.

When evaluating scalability, don’t just ask “how much traffic can it handle?” Consider how performance evolves as demand increases. Can the ADC handle thousands of concurrent sessions, millions of requests per second, or high-throughput SSL traffic without introducing latency?

You’ll also want to know:

An Application Delivery Controller that scales poorly can turn traffic spikes into outages. One that scales well can become the foundation for future growth.

Look for: high throughput (Gbps), connection-per-second capacity, clustering support, autoscaling capabilities.

Not all ADCs offer the same traffic management logic. Some only offer basic Layer 4 balancing (based on IP or port), while others support Layer 7 intelligence (routing based on URLs, cookies, headers…).

Layer 7 capabilities are especially useful for:

Application awareness also includes dynamic health checks to avoid sending users to unhealthy servers.

Look for: Layer 4 and 7 balancing, persistence options, content-based routing, health checks, SSL offloading.
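To make the Layer 4 versus Layer 7 distinction concrete, here is a minimal Python sketch of content-based routing with a health filter. It is not how SKUDONET or any particular ADC implements it; the pool names, the `X-Api-Version` header and the round-robin policy are hypothetical choices for illustration. A Layer 4 balancer only sees IPs and ports, so it could not make the path- and header-based decision in `route()`.

```python
import itertools
from dataclasses import dataclass, field


@dataclass
class Backend:
    name: str
    healthy: bool = True  # in a real ADC this flag would be driven by periodic health checks


@dataclass
class Pool:
    backends: list
    _rr: itertools.count = field(default_factory=itertools.count)

    def pick(self):
        # Skip servers that failed their health check, then round-robin over the rest.
        live = [b for b in self.backends if b.healthy]
        if not live:
            raise RuntimeError("no healthy backends in pool")
        return live[next(self._rr) % len(live)]


def route(path, headers, pools):
    """Layer 7 decision: choose a pool from the URL path and request headers."""
    if headers.get("X-Api-Version") == "2":
        return pools["api-v2"]
    if path.startswith("/api/"):
        return pools["api"]
    if path.startswith("/static/"):
        return pools["static"]
    return pools["web"]


if __name__ == "__main__":
    pools = {
        "api": Pool([Backend("api-1"), Backend("api-2", healthy=False), Backend("api-3")]),
        "api-v2": Pool([Backend("api-v2-1")]),
        "static": Pool([Backend("cdn-1")]),
        "web": Pool([Backend("web-1"), Backend("web-2")]),
    }
    # api-2 is marked unhealthy, so requests alternate between api-1 and api-3.
    print([route("/api/users", {}, pools).pick().name for _ in range(3)])  # ['api-1', 'api-3', 'api-1']
```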

Modern ADCs are not just traffic routers—they’re the front line of your application security.

A solid ADC should include:

The key here is native integration. In many platforms, these are added as extra modules—sometimes from third-party vendors—making management more complex and pricing less predictable.

Look for: integrated WAF, rule customization, L7 DDoS protection, bot mitigation, API traffic control.
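Rate limiting is one common building block behind L7 DDoS protection and API traffic control. The sketch below is a per-client token bucket, a standard technique chosen here for illustration; it is not presented as the mechanism any specific ADC uses, and the rate and burst values are assumptions. The clock is injectable so the behavior can be checked without sleeping.

```python
import time


class TokenBucket:
    """Allow `rate` requests per second on average, with bursts up to `capacity`."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity
        self.clock = clock
        self.last = clock()

    def allow(self):
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond the burst capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class PerClientLimiter:
    """One bucket per client key (for example a source IP or an API key)."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate, self.capacity, self.clock = rate, capacity, clock
        self.buckets = {}

    def allow(self, client):
        bucket = self.buckets.get(client)
        if bucket is None:
            bucket = self.buckets[client] = TokenBucket(self.rate, self.capacity, self.clock)
        return bucket.allow()


if __name__ == "__main__":
    limiter = PerClientLimiter(rate=1, capacity=2)
    print([limiter.allow("198.51.100.7") for _ in range(3)])  # [True, True, False]
```

With `rate=1, capacity=2`, each client can burst two requests and then sustain about one per second; whether to key on source IP or on API key is a policy decision the sketch leaves open.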

Your ADC should adapt to your infrastructure—not the other way around.

Whether you’re running on-premises, in the cloud, or in a hybrid setup, the ADC must support a variety of deployment methods:

Some vendors tie features or form factors to licensing restrictions—make sure the platform you choose works where you need it to.

Look for: multiple deployment formats, public cloud compatibility, support for major hypervisors.

Even the best features become frustrating if they’re hard to manage.

Many ADCs suffer from steep learning curves, non-intuitive UIs, or missing automation options. Worse, some make their visual consoles or monitoring tools part of separate, paid modules—meaning essential functions like traffic monitoring or cert management come at an extra cost.

You want:

Look for: REST+JSON API, full-featured web console, real-time traffic visibility, external integrations.

For industries like finance, healthcare, or eCommerce, security compliance is not optional.

ADC encryption handling must meet modern standards, including:

The ADC should simplify compliance, not add to the operational burden.

Look for: automatic certificate renewal (e.g., via Let’s Encrypt), strong encryption policies, compliance certification.
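Automatic renewal usually boils down to checking how close a certificate is to its expiry date. The following sketch uses Python's `ssl.cert_time_to_seconds`, which parses the `notAfter` string format returned by `getpeercert()`; the 30-day renewal window is an assumption (a common choice for ACME clients), not a requirement stated above.

```python
import ssl
import time

RENEW_WINDOW_DAYS = 30  # assumption: renew once fewer than 30 days remain


def days_until_expiry(not_after, now=None):
    """`not_after` uses the getpeercert() format, e.g. 'Sep  1 00:00:00 2025 GMT'."""
    expires = ssl.cert_time_to_seconds(not_after)  # seconds since the epoch, UTC
    now = time.time() if now is None else now
    return (expires - now) / 86400


def needs_renewal(not_after, now=None, window_days=RENEW_WINDOW_DAYS):
    return days_until_expiry(not_after, now) < window_days


if __name__ == "__main__":
    expiry = "Sep  1 00:00:00 2025 GMT"
    now = ssl.cert_time_to_seconds("Aug 15 00:00:00 2025 GMT")
    print(days_until_expiry(expiry, now), needs_renewal(expiry, now))  # 17.0 True
```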

This is where many ADC vendors fall short. What looks like a complete product often turns out to be a basic package—missing essential features unless you purchase additional modules or licenses.

This modular approach makes it hard to estimate the actual cost of the solution over time. It also complicates procurement and makes pricing comparisons difficult, as vendors price differently based on throughput, users, features, and support tiers.

Support is another critical pain point.

Many ADC vendors outsource their support to general helpdesk services operating through ticketing systems. The first-line staff often lack deep technical knowledge of the product, and response times can be slow—even for urgent issues. This doesn’t just delay resolution; it puts service continuity and customer trust at risk.

Look for: all-inclusive pricing models, included updates, fast SLA response, expert technical support.

SKUDONET Enterprise Edition is built for companies that want full control over application performance and security—without hidden costs or overcomplicated licensing.

Try SKUDONET Enterprise Edition free for 30 days and explore how a real ADC should work.

12 June, 2025 06:04AM by Nieves Álvarez

Still wrestling with e-books and smart rings, Miguel and Diogo give fine lessons on how to Reduce, Reuse and Recycle that involve little birds and fried eggs; they badmouth Windows 11 and explain the best way to say goodbye to Windows 10. And, between some very LoCo meetings, they still find time to set off Canonical's latest bombshell, which is handing out prizes but also involves leaving X.org behind on the side of the road. Then we reviewed the news about various summits, new dates for your calendars, and what we can expect from the new versions of Ubuntu Touch and Questing Cueca (that's how you say it, right…?).

You know the drill: listen, subscribe and share!

This episode was produced by Diogo Constantino, Miguel and Tiago Carrondo and edited by Senhor Podcast. The website is produced by Tiago Carrondo and its open source code is licensed under the terms of the MIT License. (https://creativecommons.org/licenses/by/4.0/). The theme music is “Won’t see it comin’ (Feat Aequality & N’sorte d’autruche)” by Alpha Hydrae, licensed under the CC0 1.0 Universal License. The sound effects in this episode carry the following licenses: Laughter at corny jokes: patrons laughing.mp3 by pbrproductions – https://freesound.org/s/418831/ – License: Attribution 3.0; Trombone: wah wah sad trombone.wav by kirbydx – https://freesound.org/s/175409/ – License: Creative Commons 0; Who won? 01 WINNER.mp3 by jordanielmills – https://freesound.org/s/167535/ – License: Creative Commons 0; This is an Ubuntu Alert: Breaking news intro music by humanoide9000 – https://freesound.org/s/760770/ – License: Attribution 4.0. This episode and the image used are licensed under the Attribution-NonCommercial-NoDerivatives 4.0 International (CC BY-NC-ND 4.0) license, whose full text can be read here. We are open to licensing for other types of use; contact us for validation and authorization. The episode art was commissioned from Shizamura, artist, illustrator and comic book author. You can get to know Shizamura better on Ciberlândia and on her website.

Apple has introduced a new open-source Swift framework named Containerization, designed to fundamentally reshape how Linux containers are run on macOS. In a detailed presentation, Apple revealed a new architecture that prioritizes security, privacy, and performance, moving away from traditional methods to offer a more integrated and efficient experience for developers.

The new framework aims to provide each container with the same level of robust isolation previously reserved for large, monolithic virtual machines, but with the speed and efficiency of a lightweight solution.

libc implementation, increasing the attack surface and requiring constant updates.

The Containerization framework was built with three core goals to address these challenges:

Containerization is more than just an API; it’s a complete rethinking of the container runtime on macOS.

The most significant architectural shift is that each container runs inside its own dedicated, lightweight virtual machine. This approach provides profound benefits:

EXT4. Apple has even developed a Swift package to manage the creation and population of these EXT4 filesystems directly from macOS.

vminitd: The Swift-Powered Heart of the Container

Once a VM starts, a minimal initial process called vminitd takes over. This is not a standard Linux init system; it’s a custom-built solution with remarkable characteristics:

vminitd is written entirely in Swift and runs as the first process inside the VM.

The environment vminitd runs in is barebones. It contains no core utilities (like ls, cp), no dynamic libraries, and no libc implementation.

vminitd is cross-compiled from a Mac into a single, static Linux executable. This is achieved using Swift’s Static Linux SDK and musl, a libc implementation optimized for static linking.

vminitd is responsible for setting up the entire container environment, including assigning IP addresses, mounting the container’s filesystem, and supervising all processes that run within the container.

container Command-Line Tool

To showcase the power of the framework, Apple has also released an open-source command-line tool simply called container. This tool allows developers to immediately begin working with Linux containers in this new, secure environment.

    container image pull alpine:latest
    container run -ti alpine:latest sh

Within milliseconds, the user is dropped into a shell running inside a fully isolated Linux environment. Running the ps aux command from within the container reveals only the shell process and the ps process itself, a clear testament to the powerful process isolation at work.

Both the Containerization framework and the container tool are available on GitHub. Apple is inviting developers to explore the source code, integrate the framework into their own projects, and contribute to its future by submitting issues and pull requests.

This move signals a strong commitment from Apple to making macOS a first-class platform for modern, Linux container-based development, offering a solution that is uniquely secure, private, and performant.

The post Apple Unveils “Containerization” for macOS: A New Era for Linux Containers on macOS appeared first on Utappia.

Release notes: https://kde.org/announcements/gear/25.04.2/

Now available in the snap store!

Along with that, I have fixed some outstanding bugs:

Ark: can now open and save files on removable media

Kasts: Once again has sound

WIP: Updating Qt6 to 6.9 and frameworks to 6.14

Enjoy everyone!

Unlike our software, life is not free. Please consider a donation, thanks!

To ease the path of enterprise AI adoption and accelerate the conversion of AI insights into business value, NVIDIA recently published the NVIDIA Enterprise AI Factory validated design, an ecosystem of solutions that integrates seamlessly with enterprise systems, data sources, and security infrastructure. The NVIDIA templates for hardware and software design are tailored for modern AI projects, including Physical AI & HPC with a focus on agentic AI workloads.

Canonical is proud to be included in the NVIDIA Enterprise AI Factory validated design. Canonical Kubernetes orchestration supports the process of efficiently building, deploying, and managing a diverse and evolving suite of AI agents on high-performance infrastructure. The Ubuntu operating system is at the heart of NVIDIA Certified Systems across OEM partnerships like Dell. Canonical also collaborates with NVIDIA to ensure the stability and security of open-source software across AI Factory by securing agentic AI dependencies within NVIDIA’s artifact repository.

Canonical’s focus on open source, model-driven operations and ease of use offers enterprises flexible options for building their AI Factory on NVIDIA-accelerated infrastructure.

Canonical Kubernetes is a securely designed and supported foundational platform. It unifies the management of a complex stack – including NVIDIA AI Enterprise, storage, networking, and observability tools – onto a single platform.

Within the NVIDIA Enterprise AI Factory validated design, Kubernetes is used to independently develop, update, and scale microservice-based agents, coupled with automated CI/CD pipelines. Kubernetes also handles the significant and often burstable compute demands for training AI models and scales inference services for deployed agents based on real-time needs.
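Scaling inference services on real-time demand is typically handled in Kubernetes by the Horizontal Pod Autoscaler, whose documented core rule is desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue), with no change while the ratio stays within a tolerance (0.1 by default). The Python below is a simplified sketch of that rule only: it ignores stabilization windows and scaling policies, and the metric (for example, requests per second per replica) and replica bounds are assumptions rather than values from the NVIDIA design.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Simplified HPA-style replica calculation for a single metric."""
    ratio = current_metric / target_metric
    # Inside the tolerance band, keep the current size to avoid flapping.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 4 replicas averaging 90 req/s each against a target of 60 req/s per replica.
    print(desired_replicas(4, 90, 60))  # 6
```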

Based on upstream Kubernetes, Canonical Kubernetes is integrated with the NVIDIA GPU and Networking Operators to leverage NVIDIA hardware acceleration and supports the deployment of NVIDIA AI Enterprise, enabling AI workloads with NVIDIA NIM and accelerated libraries.

Canonical Kubernetes provides full-lifecycle automation and long-term support, including the recently announced 12-year security maintenance.

Ubuntu is the most widely used operating system for AI workloads. Choosing Ubuntu as the base OS for NVIDIA AI Factory gives organizations a trusted repository for all their open source, not just the OS. With Ubuntu Pro, customers get up to 12 years of security maintenance for thousands of open source packages including the most widely used libraries and toolchains, like Python, R and others. Organizations can complement that with Canonical’s Container Build Service to get custom containers built to spec, and security maintenance for their entire open source dependency tree.

To learn more about what the NVIDIA Enterprise AI Factory validated design could do for you, get in touch with our team – we’d love to hear about your project.

Visit us at our booth E03 at NVIDIA GTC Paris on June 11-12 for an in-person conversation about NVIDIA Enterprise AI Factory validated designs.

The combined solutions simplify infrastructure operations and accelerate time-to-value for AI, telecom, and enterprise computing workloads.

At GTC Paris today, Canonical announced support for the NVIDIA DOCA Platform Framework (DPF) with Canonical Kubernetes LTS. This milestone strengthens the strategic collaboration between the two companies and brings the benefits of NVIDIA BlueField DPU accelerations to cloud-native environments with end-to-end automation, open-source flexibility, and long-term support.

DPF is NVIDIA’s software framework for managing and orchestrating NVIDIA BlueField DPUs at scale. NVIDIA BlueField enables advanced offloading of infrastructure services (such as networking, storage, and security) directly onto the DPU, freeing up host CPU resources and enabling secure, high-performance, zero-trust architectures. With Canonical Kubernetes now officially supporting DPF 25.1, developers and infrastructure teams can easily integrate these capabilities into their AI, telecom and enterprise computing workloads using Canonical’s proven tooling and automation stack.

This integration enables organizations to deploy DPU-accelerated infrastructure across telco, enterprise, and edge use cases, with key benefits including:

“This milestone marks a significant step forward in our collaboration with NVIDIA,” said Cedric Gegout, VP of Product at Canonical. “Together, we’re enabling a new class of infrastructure that combines the power of NVIDIA BlueField DPUs with the flexibility and automation of Canonical Kubernetes – laying the groundwork for secure, high-performance environments across AI, telecom, and enterprise use cases.”

When combined, the NVIDIA DOCA software framework and Canonical Kubernetes empower cloud architects and platform engineers to design scalable, secure infrastructure while minimizing operational complexity. For DevOps and SRE teams, the integration of BlueField-accelerated services into CI/CD pipelines becomes streamlined, enabling consistent, automated delivery of infrastructure components. Application developers gain access to offloaded services through the DOCA SDK and APIs, accelerating innovation without compromising performance. Meanwhile, IT decision makers benefit from enhanced efficiency, workload isolation, and built-in compliance as they modernize their infrastructure.

Open source and cloud-native communities now have a powerful foundation to build on with Canonical Kubernetes and NVIDIA DPF. This integration enables contributors, researchers, and ecosystem partners to adopt and extend a truly open DPU architecture – one where offloaded networking, security, and observability services run independently of the host CPU. By leveraging DOCA’s modular approach and Canonical’s fully supported Kubernetes stack, developers can co-create a rich ecosystem of BlueField-accelerated functions that complement and enhance the performance, scalability, and resilience of applications running on the main CPU.

Canonical is enabling the next generation of composable, DPU-accelerated cloud infrastructure with NVIDIA. Canonical Kubernetes offers a robust, enterprise-grade platform to install, operate, and scale NVIDIA BlueField-accelerated services using NVIDIA DPF with full automation and long-term support. To get started, visit ubuntu.com/kubernetes for product details and support options, and explore the DPF documentation on GitHub for deployment guides and examples.

If you have any questions about running DPF with Canonical Kubernetes, please stop by our booth #E03 at NVIDIA GTC Paris or contact us.

11 June, 2025 08:21AM by xiaofei

Everyone agrees security matters, yet when it comes to big data analytics with Apache Spark, it’s not just another checkbox. Spark’s open source Java architecture introduces special security concerns that, if neglected, can quietly expose sensitive information and disrupt vital functions. Unlike most software, Spark’s design permits user-provided code to execute with extensive control over cluster resources, which requires strong security measures to prevent unauthorized access and information leaks.

Securing Spark is key to maintaining enterprise business continuity, safeguarding data in memory as well as at rest, and defending against emerging vulnerabilities unique to distributed, in-memory processing platforms. Unfortunately, securing Spark is far from a trivial task; in this blog we’ll take a closer look at what makes it so challenging, and the steps that enterprises can take to protect their big data platforms.

Closing vulnerabilities in Java applications is very hard. Closing CVEs is fundamental for any software because it is one of the best ways to reduce the risk of being impacted by a cyber attack through known vulnerabilities. However, closing CVEs in Java applications like Spark is uniquely challenging for a number of reasons.

The first issue is the complexity in managing dependencies: a typical Java app may include more than 100 third-party libraries, each with different versions and dependencies. When a vulnerability is found in one library, updating or downgrading it can break compatibility with other dependencies that rely on specific versions, making remediation complex and risky. This tangled nest of dependencies can make some vulnerabilities practically impossible to fix without extensive testing and refactoring.
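A toy example shows why a single version bump can be risky. Suppose three libraries (hypothetical `lib-a`, `lib-b` and `lib-c`) each declare the range of a shared dependency they were tested against. The sketch checks a candidate version of that shared dependency against every range; the names, versions and half-open range convention are invented for illustration.

```python
# Hypothetical constraints: each library declares the range of a shared
# dependency it supports, as (minimum inclusive, maximum exclusive).
CONSTRAINTS = {
    "lib-a": ((2, 12, 0), (2, 13, 0)),
    "lib-b": ((2, 10, 0), (2, 15, 0)),
    "lib-c": ((2, 12, 0), (2, 14, 0)),
}


def parse(version):
    """'2.13.4' -> (2, 13, 4); assumes plain numeric version strings."""
    return tuple(int(part) for part in version.split("."))


def conflicts(candidate, constraints=CONSTRAINTS):
    """Return the libraries whose declared range excludes `candidate`."""
    ver = parse(candidate)
    return sorted(lib for lib, (lo, hi) in constraints.items() if not lo <= ver < hi)


if __name__ == "__main__":
    # Current version is fine; a hypothetical patched release breaks lib-a.
    print(conflicts("2.12.7"), conflicts("2.13.4"))  # [] ['lib-a']
```

Here the deployed 2.12.7 satisfies every library, but moving to the hypothetical fixed release 2.13.4 falls outside `lib-a`'s range, so the fix cannot land until `lib-a` is updated or re-tested.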

Apart from this, Java is verbose and widely used in enterprise applications, typically in highly complex monolithic architectures. As a result, a single vulnerability in a popular component can affect millions of Java applications all over the world, creating a huge attack surface. The ease of exploitation and the scale of these vulnerabilities make them hard to eradicate entirely when affected versions are deeply embedded in many systems. Consequently, developers typically face a massive volume of CVE reports, which is challenging to prioritize and delays remediation.
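One simple way to tame a large CVE backlog is to sort it by severity and then by likelihood of exploitation. The sketch below does exactly that; the `Finding` fields, the placeholder IDs and the scores are hypothetical, and real triage would also weigh factors such as reachability and fix availability.

```python
from dataclasses import dataclass

# Qualitative CVSS ratings mapped to a sortable rank.
SEVERITY_RANK = {"critical": 4, "high": 3, "medium": 2, "low": 1}


@dataclass
class Finding:
    cve_id: str          # placeholder identifiers in the example below
    severity: str        # one of the SEVERITY_RANK keys
    exploit_prob: float  # e.g. an EPSS-style probability in [0, 1]


def triage(findings):
    """Order findings by severity first, then by exploitation probability."""
    return sorted(findings,
                  key=lambda f: (SEVERITY_RANK[f.severity], f.exploit_prob),
                  reverse=True)


if __name__ == "__main__":
    findings = [
        Finding("EXAMPLE-1", "medium", 0.9),
        Finding("EXAMPLE-2", "critical", 0.2),
        Finding("EXAMPLE-3", "critical", 0.7),
        Finding("EXAMPLE-4", "critical", 0.6),
    ]
    print([f.cve_id for f in triage(findings)])  # ['EXAMPLE-3', 'EXAMPLE-4', 'EXAMPLE-2', 'EXAMPLE-1']
```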

Research shows that delayed patching is a major cause of security breaches in enterprise environments. For example, the IBM 2024 Cost of a Data Breach report found that breaches originating from known unpatched vulnerabilities cost an average of $4.33M, and the Canonical and IDC 2025 State of Software Supply Chains report indicates that 60% of organizations have only basic or no security controls to safeguard their AI/ML systems. These challenges create significant risks: delays in applying security patches leave systems exposed to known vulnerabilities, compatibility issues can force organizations to choose between security and stability, and vulnerabilities in widely used Java components can compromise millions of applications simultaneously, causing disruption when critical fixes must be applied right away.

Java-related challenges have a deep impact on Apache Spark. First, Apache Spark has thousands of dependencies, so it is difficult to fix a CVE (either by patching or by bumping the version) without breaking compatibility. This huge number of dependencies also affects the number and severity of vulnerabilities. In fact, Spark has experienced several critical and high-severity vulnerabilities over the years that are traceable to its Java origins. In 2022, a command injection vulnerability was discovered in the Spark UI (CVE-2022-33891) with an estimated 94.2% probability of exploitation, placing it in the top 1% of known exploitable vulnerabilities at the time, and in 2024 alone two new critical vulnerabilities came out, clearly showing the threat posed by slow patch adoption in Java. These issues are not only a security concern for Spark clusters; they also force companies to make hard choices between applying the latest security updates and prioritizing the stability of their infrastructure.
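As an illustration of how narrowly such a flaw can be scoped, here is a rough exposure check for CVE-2022-33891. It assumes the affected ranges from the public advisory (3.0.3 and earlier, 3.1.1 to 3.1.2, and 3.2.0 to 3.2.1, fixed in 3.1.3, 3.2.2 and 3.3.0) and that the vulnerable code path is only reachable when `spark.acls.enable` is set to true; verify against the official Apache advisory before relying on it. Version strings are assumed to be plain `major.minor.patch`.

```python
def parse_version(version):
    """'3.2.1' -> (3, 2, 1); assumes plain numeric release strings."""
    return tuple(int(part) for part in version.split("."))


# Affected ranges for CVE-2022-33891 as published (inclusive bounds).
AFFECTED = [
    ((0, 0, 0), (3, 0, 3)),
    ((3, 1, 1), (3, 1, 2)),
    ((3, 2, 0), (3, 2, 1)),
]


def exposed_to_cve_2022_33891(version, acls_enabled):
    """True if the version is in an affected range and spark.acls.enable is on."""
    v = parse_version(version)
    in_range = any(lo <= v <= hi for lo, hi in AFFECTED)
    return acls_enabled and in_range


if __name__ == "__main__":
    print(exposed_to_cve_2022_33891("3.2.1", True),
          exposed_to_cve_2022_33891("3.2.2", True))  # True False
```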

At Canonical, we believe that robust security should be an integral part of your data analytics platform, not a secondary element – and with Charmed Spark, we aim to address the traditional complexity of securing enterprise Spark deployments.

We maintain a steady release pace of roughly one new version per month, while supporting two major.minor version tracks, which as of today are 3.4.x and 3.5.x. This dual-track support ensures stability for existing users while allowing for ongoing feature development and security improvements. In addition, our proactive vulnerability management has allowed us, in the past year, to close 10 critical CVEs, resolve 84 high-severity vulnerabilities, and address 161 medium-severity vulnerabilities in Spark and its dependencies. We extend this focus to the dependencies of related projects such as Hadoop.

By investing in automated, self-service security testing, we accelerate the detection and fixing of vulnerabilities, minimizing downtime and manual intervention. Our comprehensive approach to security includes static code analysis, continuous vulnerability scans, rigorous management processes, and detailed cryptographic documentation, as well as hardening guides to help you deploy Spark with security in mind from day one.

Charmed Spark is a platform where security is a central element. Users benefit from reduced exposure to breaches involving known vulnerabilities, thanks to regular updates and timely fixes, and from access to useful tools and documentation for installing and operating Spark in a securely designed manner. Java applications are a frequent target of attacks, and dependency complexity can slow the deployment of patches. In that environment, Canonical’s approach helps maintain a higher level of protection against threats, so users can analyze and use data without undue concern about security weaknesses. This ultimately enables enterprises to focus on their core business applications and to provide value to their customers without having to worry about external threats.

While the complexity of Java applications and their extensive dependency ecosystems present ongoing challenges, Charmed Apache Spark gives you a securely designed open source analytics engine without the level of vulnerability challenges that typically come with such a large Java-based project. Moving forward, these foundational security practices will continue to play a vital role in protecting the Spark ecosystem and supporting the broader open source community.

To learn more about securing your Spark operations, watch our webinar.


There are some new packages available in the BunsenLabs Carbon apt repository:

labbe-icons-bark

labbe-icons-sage

These will be used by default in Carbon.

Also available are:

labbe-icons-grey

labbe-icons-oomox

labbe-icons-telinkrin

And there's a new wallpaper setter:

xwwall

and a gtk GUI builder that xwwall uses:

gtk3dialog

Both of those will be used in Carbon.

All of these packages come thanks to the work of @micko01


A gentleman by the name of Arif Ali reached out to me on LinkedIn. I won’t share the actual text of the message, but I’ll paraphrase:

“I hope everything is going well with you. I’m applying to be an Ubuntu ‘Per Package Uploader’ for the SOS package, and I was wondering if you could endorse my application.”

Arif, thank you! I have always appreciated our chats, and I truly believe you’re doing great work. I don’t want to interfere with anything by jumping on the wiki, but just know you have my full backing.

“So, who actually lets Arif upload new versions of SOS to Ubuntu, and what is it?”

Great question!

Firstly, I realized that I needed some more info on what SOS is, so I can explain it to you all. On a quick search, this was the first result.

Okay, so genuine question…

Why does the first DuckDuckGo result for “sosreport” point to an article for a release of Red Hat Enterprise Linux that is two versions old? In other words, hey DuckDuckGo, your grass is starting to get long. Or maybe Red Hat? Can’t tell, I give you both the benefit of the doubt, in good faith.

So, I clarified the search and found this. Canonical, you’ve done a great job. Red Hat, you could work on your SEO so I can actually find the RHEL 10 docs quicker, but hey… B+ for effort. ;)

Anyway, let me tell you about Arif. Just from my own experiences.

He’s incredible. He shows love to others, and whenever I would sponsor one of his packages during my time in Ubuntu, he was always incredibly receptive to feedback. I really appreciate the way he reached out to me, as well. That was really kind, and to be honest, I needed it.

As for character, he has my +1. In terms of the members of the DMB (aside from one person who I will not mention by name, who has caused me immense trouble elsewhere), here’s what I’d tell you if you asked me privately…

“It’s just PPU. Arif works on SOS as part of his job. Please, do still grill him. The test, and ensuring people know that they actually need to pass a test to get permissions, that’s pretty important.”

That being said, I think he deserves it.

Good luck, Arif. I wish you well in your meeting. I genuinely hope this helps. :)

And to my friends in Ubuntu, I miss you. Please reach out. I’d be happy to write you a public letter, too. Only if you want. :)

Theodore Roosevelt is someone I have admired for a long time. I especially appreciate what has been coined the Man in the Arena speech.

A specific excerpt comes to mind after reading world news over the last twelve hours:

“It is well if a large proportion of the leaders in any republic, in any democracy, are, as a matter of course, drawn from the classes represented in this audience to-day; but only provided that those classes possess the gifts of sympathy with plain people and of devotion to great ideals. You and those like you have received special advantages; you have all of you had the opportunity for mental training; many of you have had leisure; most of you have had a chance for enjoyment of life far greater than comes to the majority of your fellows. To you and your kind much has been given, and from you much should be expected. Yet there are certain failings against which it is especially incumbent that both men of trained and cultivated intellect, and men of inherited wealth and position should especially guard themselves, because to these failings they are especially liable; and if yielded to, their- your- chances of useful service are at an end. Let the man of learning, the man of lettered leisure, beware of that queer and cheap temptation to pose to himself and to others as a cynic, as the man who has outgrown emotions and beliefs, the man to whom good and evil are as one. The poorest way to face life is to face it with a sneer. There are many men who feel a kind of twisted pride in cynicism; there are many who confine themselves to criticism of the way others do what they themselves dare not even attempt. There is no more unhealthy being, no man less worthy of respect, than he who either really holds, or feigns to hold, an attitude of sneering disbelief toward all that is great and lofty, whether in achievement or in that noble effort which, even if it fails, comes second to achievement.
A cynical habit of thought and speech, a readiness to criticise work which the critic himself never tries to perform, an intellectual aloofness which will not accept contact with life’s realities — all these are marks, not as the possessor would fain think, of superiority, but of weakness. They mark the men unfit to bear their part manfully in the stern strife of living, who seek, in the affectation of contempt for the achievements of others, to hide from others and from themselves their own weakness. The rôle is easy; there is none easier, save only the rôle of the man who sneers alike at both criticism and performance.”

The riots in LA are seriously concerning to me. If something doesn’t happen soon, this is going to get out of control.

If you are participating in these events, or know someone who is, tell them to calm down. Physical violence is never the answer, no matter your political party.

De-escalate immediately.

Be well. Show love to one another!

My Debian contributions this month were all sponsored by Freexian. Things were a bit quieter than usual, as for the most part I was sticking to things that seemed urgent for the upcoming trixie release.

You can also support my work directly via Liberapay or GitHub Sponsors.

After my appeal for help last month to debug intermittent sshd crashes, Michel Casabona helped me put together an environment where I could reproduce it, which allowed me to track it down to a root cause and fix it. (I also found a misuse of strlcpy affecting at least glibc-based systems in passing, though I think that was unrelated.)

I worked with Daniel Kahn Gillmor to fix a regression in ssh-agent socket handling.

I fixed a reproducibility bug depending on whether passwd is installed on the build system, which would have affected security updates during the lifetime of trixie.

I backported openssh 1:10.0p1-5 to bookworm-backports.

I issued bookworm and bullseye updates for CVE-2025-32728.

I backported a fix for incorrect output when formatting multiple documents as PDF/PostScript at once.

I added a simple autopkgtest.

I upgraded these packages to new upstream versions:

In bookworm-backports, I updated these packages:

I fixed problems building these packages reproducibly:

I backported fixes for some security vulnerabilities to unstable (since we’re in freeze now, it’s not always appropriate to upgrade to new upstream versions):

I fixed various other build/test failures:

I added non-superficial autopkgtests to these packages:

I packaged python-django-hashids and python-django-pgbulk, needed for new upstream versions of python-django-pgtrigger.

I ported storm to Python 3.14.

I fixed a build failure in apertium-oci-fra.

Back in 2020 I posted about my desk setup at home.

Recently someone in our #remotees channel at work asked about WFH setups and given quite a few things changed in mine, I thought it's time to post an update.

But first, a picture!

(Yes, it's cleaner than usual, how could you tell?!)


It's still the same Flexispot E5B, no change here. After 7 years (I bought mine in 2018) it still works fine. If I had to buy a new one, I'd probably get a four-legged one for more stability (they have become quite affordable), but there is no immediate need for that.

It's still the IKEA Volmar. Again, no complaints here.

Now here we finally have some updates!

A Lenovo ThinkPad X1 Carbon Gen 12, Intel Core Ultra 7 165U, 32GB RAM, running Fedora (42 at the moment).

It's connected to a Lenovo ThinkPad Thunderbolt 4 Dock. It just works™.

It's still the P410, but mostly unused these days.

An AOC U2790PQU 27" 4K. I'm running it at 150% scaling, which works quite decently these days (no comparison to when I got it).

As the new monitor didn't want to take the old Dell soundbar, I have upgraded to a pair of Alesis M1Active 330 USB.

They sound good and were not too expensive.

I had to fix the volume control after some time though.

It's still the Logitech C920 Pro.

The built-in mic of the C920 is really fine, but to do conference-grade talks (and some podcasts 😅), I decided to get something better.

I got a FIFINE K669B, with a nice arm.

It's not a Shure, for sure, but does the job well and Christian was quite satisfied with the results when we recorded the Debian and Foreman specials of Focus on Linux.

It's still the ThinkPad Compact USB Keyboard with TrackPoint.

I had to print a few fixes and replacement parts for it, but otherwise it's doing great.

Seems Lenovo stopped making those, so I really shouldn't break it any further.

Logitech MX Master 3S. The surface of the old MX Master 2 got very sticky at some point and it had to be replaced.

I'm still terrible at remembering things, so I still write them down in an A5 notepad.

I've also added a (small) whiteboard on the wall right of the desk, mostly used for long term todo lists.

Turns out Xeon-based coasters are super stable, so it lives on!

Yepp, still a thing. Still USB-A because... reasons.

Still the Bose QC25, by now on the third set of ear cushions, but otherwise working great and the odd 15€ cushion replacement does not justify buying anything newer (which would have the same problem after some time, I guess).

I did add a cheap (~10€) Bluetooth-to-headphone-jack dongle, so I can use them with my phone too (shakes fist at modern phones).

And I do use the headphones more in meetings, as the Alesis speakers fill the room more with sound and thus sometimes produce a bit of an echo.

The Bose need AAA batteries, and so do some other gadgets in the house, so there is a technoline BC 700 charger for AA and AAA on my desk these days.

Yepp, I've added an IKEA Tertial and an ALDI "face" light. No, I don't use them much.

I've "built" a KVM switch out of a USB switch, but given I don't use the workstation that often these days, the switch is also mostly unused.

Hey everyone,

Get ready to dust off those virtual cobwebs and crack open a cold one (or a digital one, if you’re in a VM) because uCareSystem 25.05.06 has officially landed! And let me tell you, this release is so good, it’s practically a love letter to your Linux system – especially if that system happens to be chilling out in Windows Subsystem for Linux (WSL).

That’s right, folks, the big news is out: WSL support for uCareSystem has finally landed! We know you’ve been asking, we’ve heard your pleas, and we’ve stopped pretending we didn’t see you waving those “Free WSL” signs.

Now, your WSL instances can enjoy the same tender loving care that uCareSystem provides for your “bare metal” Ubuntu/Debian Linux setups. No more feeling left out, little WSLs! You can now join the cool kids at the digital spa.

Here is a video of it:

But wait, there’s more! (Isn’t there always?) We didn’t just stop at making friends with Windows. We also tackled some pesky gremlins that have been lurking in the shadows:

-k option? Yeah, that’s gone too. We decided it was useless, so we had to retire it to a nice, quiet digital farm upstate.

So, what are you waiting for? Head over to utappia.org (or wherever you get your uCareSystem goodness) and give your system the pampering it deserves with uCareSystem 25.05.06. Your WSL instance will thank you, probably with a digital high-five.

Download the latest release and give it a spin. As always, feedback is welcome.

Thanks to the following users for their support:

Your involvement helps keep this project alive, evolving, and aligned with real-world needs. Thank you.

Happy maintaining!

As always, I want to express my gratitude for your support over the past 15 years. I have received countless messages from inside and outside Greece about how useful they found the application. I hope you find the new version useful as well.

If you’ve found uCareSystem to be valuable and it has saved you time, consider showing your appreciation with a donation. You can contribute via PayPal or Debit/Credit Card by clicking on the banner.

Once installed, the updates for new versions will be installed along with your regular system updates.

The post uCareSystem 25.05.06: Because Even Your WSL Deserves a Spa Day! appeared first on Utappia.


The Cybersecurity Maturity Model Certification, or CMMC for short, is a security framework for protecting Controlled Unclassified Information (CUI) in non-federal systems and organizations. The CMMC compliance requirements map to the set of controls laid out in the NIST SP 800-171 Rev 2 and NIST SP 800-172 families.

CMMC version 2.0 came into effect on December 26, 2023, and is designed to ensure adherence to rigorous cybersecurity policies and practices within the public sector and amongst wider industry partners.

Whilst many of the controls relate to how organizations conduct their IT operations, there are several specific technology requirements, and Ubuntu Pro includes features which meet these requirements head on.

CMMC has 3 levels, designed to meet increasing levels of security scrutiny:

Most independent contractors and industry partners will use level 2, and perform an annual self-assessment of their security posture against the program requirements.

While the 2.0 standard has been live since December 2023, CMMC will become a contractual requirement after 3 years, which falls in 2026. However, it takes time to work through the controls and achieve the security requirements, and organizations may take anywhere from months to years to gain this level of maturity, depending on their size and agility. Undoubtedly, the best course of action is to start planning now in order to remain eligible for contracts and to keep winning business.

CMMC is based on the NIST SP 800-171 security controls framework for handling Controlled Unclassified Information – similar to FedRAMP – and so anyone familiar with these publications will feel comfortable with the CMMC requirements. Whilst NIST SP 800-171 provides a wide range of security controls, the exact implementation can be left to the user’s discretion; CMMC gives exact requirements and provides a framework for self-assessment and auditing.

In order to become CMMC compliant, you should be systematic in your approach. Here’s how to proceed:

Patching security vulnerabilities

Ubuntu Pro supports the CMMC requirement to remediate software vulnerabilities in a timely manner. Since starting out 20 years ago, Canonical has typically released patches for critical vulnerabilities within 24 hours. We provide 12 years of security patching for all the software applications and infrastructure components within the Ubuntu ecosystem.

FIPS-certified crypto modules

Ubuntu Pro provides FIPS 140-2 and FIPS 140-3 certified cryptographic modules that you can deploy with a single command. These certified modules replace the standard cryptographic libraries which ship with Ubuntu by default, making the system FIPS 140 compliant, and allowing existing applications to make use of FIPS-approved cryptographic algorithms and ciphers without further certification or modification.
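After you enable the FIPS modules, one quick sanity check on Linux is the kernel's FIPS flag at `/proc/sys/crypto/fips_enabled`, which reads `1` when the kernel is running in FIPS mode. The sketch below assumes a kernel built with FIPS support, since the file may not exist otherwise. It checks only that kernel flag and does not check which user-space cryptographic libraries are installed, so it is a spot check and not a compliance test.

```python
from pathlib import Path

# Kernel FIPS flag on Linux; present only on kernels built with FIPS support.
FIPS_FLAG = Path("/proc/sys/crypto/fips_enabled")


def parse_fips_flag(text: str) -> bool:
    """Interpret the flag file contents: '1' means FIPS mode is on."""
    return text.strip() == "1"


def fips_mode_enabled(flag_path: Path = FIPS_FLAG) -> bool:
    """Report whether the kernel says it is in FIPS mode; False if the flag is absent."""
    try:
        return parse_fips_flag(flag_path.read_text())
    except FileNotFoundError:
        return False


if __name__ == "__main__":
    print("Kernel FIPS mode:", "on" if fips_mode_enabled() else "off or unavailable")
```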

System hardening

DISA-STIG is a system hardening guide that describes how to configure an Ubuntu system to be maximally secure, by locking it down and restricting unnecessary privileges. The STIG for Ubuntu lists several hundred individual configuration steps to turn a generic Ubuntu installation into a fully secure environment. System hardening is an important CMMC requirement.

You can simplify STIG hardening with the Ubuntu Security Guide (USG): the USG tool enables automated auditing and remediation of the individual configuration steps in order to comply with the STIG benchmark, and allows you to customize the hardening profile to meet individual deployment needs.
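To illustrate automated auditing of individual configuration steps with a tailored profile, here is a toy checker for a few SSH server settings. It is not USG and does not use USG's rule identifiers or profiles. The rule IDs and required values are hypothetical stand-ins. The checker reads only the global section of an `sshd_config`, stopping at the first `Match` block. It assumes the whitespace-separated `Keyword value` form and applies sshd's first-value-wins rule for repeated keywords. A setting that is not set explicitly counts as a failure.

```python
# Hypothetical rules: rule id -> (sshd_config keyword, required value).
RULES = {
    "ssh-no-empty-passwords": ("PermitEmptyPasswords", "no"),
    "ssh-no-root-login": ("PermitRootLogin", "no"),
    "ssh-no-x11-forwarding": ("X11Forwarding", "no"),
}


def parse_sshd_config(text: str) -> dict[str, str]:
    """Collect global keyword/value pairs (keywords lower-cased) from sshd_config text."""
    settings: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # blank lines and comment lines
        parts = line.split(None, 1)
        if len(parts) != 2:
            continue
        keyword, value = parts[0].lower(), parts[1].strip()
        if keyword == "match":
            break  # later settings apply only conditionally; ignored in this sketch
        # sshd uses the first value it obtains for each keyword.
        settings.setdefault(keyword, value)
    return settings


def audit(text: str, skip: frozenset[str] = frozenset()) -> list[str]:
    """Return the IDs of failing rules, excluding rules tailored out via `skip`."""
    settings = parse_sshd_config(text)
    failures = []
    for rule_id, (keyword, required) in RULES.items():
        if rule_id in skip:
            continue
        if settings.get(keyword.lower()) != required:
            failures.append(rule_id)
    return failures


if __name__ == "__main__":
    sample = "PermitRootLogin no\nPermitEmptyPasswords no\n#X11Forwarding no\n"
    # The commented-out X11Forwarding line does not count, so that rule fails.
    print(audit(sample))
    # Tailoring: skip a rule that does not apply to this deployment.
    print(audit(sample, frozenset({"ssh-no-x11-forwarding"})))
```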

Canonical is a software distributor rather than a service provider, and as such we are not CMMC certified ourselves, but through Ubuntu Pro we provide the tools that enable our customers to meet these specific technology requirements within the baseline controls.

In short, Ubuntu Pro provides an easy pathway to CMMC compliance. It delivers CVE patching for the Ubuntu OS and applications, covering 36,000 packages, along with automated, unattended, and restartless updates, and the best tools to secure and manage your Ubuntu infrastructure, developed by the publisher of Ubuntu. Learn more about Ubuntu Pro on our explanatory web page.

06 June, 2025 10:04AM by xiaofei

Bazaar is a distributed revision control system, originally developed by Canonical. It provides functionality similar to that of the now-dominant Git.

Bazaar code hosting is a Launchpad offering that provides both a Bazaar backend for hosting code and a web frontend for browsing it. The frontend is provided by the Loggerhead application on Launchpad.

Bazaar passed its peak a decade ago. Breezy is a fork of Bazaar that has kept a form of Bazaar alive, but the last release of Bazaar itself was in 2016. Since then its usage has declined, and modern replacements like Git have taken its place.

Just keeping Bazaar running requires a non-trivial amount of development, operations time, and infrastructure resources – all of which could be better used elsewhere.

Launchpad will now begin the process of discontinuing support for Bazaar.

We are aware that migrating repositories and updating workflows will take some time, which is why we have planned the sunsetting in two phases.

Loggerhead, the web frontend used to browse code in a web browser, will be shut down imminently. Analysis of the access logs showed that there are hardly any requests from legitimate users any more; almost all of the traffic comes from scrapers and other abusers. Sunsetting Loggerhead will not affect the ability to pull, push and merge changes.

From September 1st, 2025, we no longer intend to operate Bazaar, the code hosting backend. Users need to migrate all repositories from Bazaar to Git before this deadline.

The following blog post describes all the steps needed to convert a Bazaar repository hosted on Launchpad to Git.

Our users are extremely important to us. Ubuntu, for instance, has a long history of Bazaar usage, and we will need to work with the Ubuntu Engineering team to find a way forward that removes Ubuntu development's reliance on the Bazaar integration. If you are also using Bazaar and you have a special use case, or you do not see a clear way forward, please reach out to us to discuss your use case and how we can help you.

You can reach us in #launchpad:ubuntu.com on Matrix, or submit a question or send us an e-mail via feedback@launchpad.net.

It is also recommended to join the ongoing discussion at https://discourse.ubuntu.com/t/phasing-out-bazaar-code-hosting/62189.

06 June, 2025 03:04AM by xiaofei

The only ‘Made in America’ smartphone maker has a message for Apple about manufacturing in the Trump tariff era.

The post Fortune.com Features Purism and the Made in America Liberty Phone appeared first on Purism.

05 June, 2025 06:16PM by Purism

Software supply chain security has become a top concern for developers, DevOps engineers, and IT leaders. High-profile breaches and dependency compromises have shown that open source components can introduce risk if not properly vetted and maintained. Although containerization has become commonplace in contemporary development and deployment, it can have drawbacks in terms of reproducibility and security.

There is a dire need for container builds that are not only simple to deploy, but also safe, repeatable, and maintained long-term against new threats – and that’s why Canonical is introducing the Container Build Service.

The use of open source software (OSS) is becoming more and more prevalent in enterprise environments. Analyses show that OSS makes up around 70% of all software in use, so it is no longer considered a supplementary element but rather the foundation of modern applications. Even more striking, 97% of commercial codebases are reported to integrate some OSS components, highlighting how fundamental it has truly become. However, as the use of OSS grows, open source vulnerabilities are also frequently discovered. Research indicates that 84% of codebases contain at least one known open source vulnerability, and almost half of those vulnerabilities are categorized as high-severity. Black Duck’s 2025 Open Source Security and Risk Analysis (OSSRA) report showed that this risk has been amplified by the sheer number of open source files used by applications. That number has roughly tripled in just four years, from an average of 5,300 in 2020 to over 16,000 in 2024, and the growth in attack surface is directly correlated with this rise.
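As a quick check on the growth figure, take 16,000 as the 2024 average (the report says "over 16,000"). The four-year growth factor and the implied compound annual growth rate are:

```latex
\frac{16\,000}{5\,300} \approx 3.02,
\qquad
\left(\frac{16\,000}{5\,300}\right)^{1/4} - 1 \approx 0.318
```

That is an average increase of roughly 32% per year in open source files per application.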

According to a report from Canonical and IDC, organizations are adopting OSS primarily to reduce costs (44%), accelerate development (36%), and increase reliability (31%). Although nine out of ten organizations prefer to source packages from trusted repositories, such as those of their operating system, most still pull directly from upstream registries. This means that the responsibility for patching falls heavily on IT teams. The report found that seven in ten teams dedicate over six hours per week (almost a full working day) to sourcing and applying security updates. The same proportion mandates that high and critical-severity vulnerabilities be patched within 24 hours, yet only 41% feel confident they can meet that SLA. What’s also interesting is that more than half of organizations do not automatically upgrade their in-production systems or applications to the newest versions, leaving them exposed to known vulnerabilities.
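The 24-hour SLA is simple to check mechanically once disclosure and patch times are recorded. Here is a minimal sketch, assuming each vulnerability record carries a severity and consistent timestamps (all naive, or all in the same timezone). The `Vuln` type and the example IDs are hypothetical, and unpatched issues are measured against the current time.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

PATCH_SLA = timedelta(hours=24)
SLA_SEVERITIES = {"high", "critical"}  # severities the SLA applies to


@dataclass(frozen=True)
class Vuln:
    cve_id: str
    severity: str
    disclosed_at: datetime
    patched_at: Optional[datetime] = None  # None means not yet patched


def sla_breaches(vulns: list[Vuln], now: datetime, sla: timedelta = PATCH_SLA) -> list[str]:
    """Return IDs of high/critical vulnerabilities that took, or are taking, longer than the SLA."""
    breaches = []
    for v in vulns:
        if v.severity.lower() not in SLA_SEVERITIES:
            continue
        # Unpatched vulnerabilities are measured against the current time.
        finished = v.patched_at if v.patched_at is not None else now
        if finished - v.disclosed_at > sla:
            breaches.append(v.cve_id)
    return breaches


if __name__ == "__main__":
    t0 = datetime(2025, 6, 1, 9, 0)
    records = [
        Vuln("EXAMPLE-1", "critical", t0, t0 + timedelta(hours=20)),
        Vuln("EXAMPLE-2", "high", t0, t0 + timedelta(hours=30)),
        Vuln("EXAMPLE-3", "medium", t0),
    ]
    print(sla_breaches(records, now=t0 + timedelta(hours=48)))
```

Exactly 24 hours counts as meeting the SLA here. Whether the boundary is inclusive is a policy choice.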

Supply chain attacks are also becoming more frequent. A study conducted by Sonatype showed that the number of software supply chain attacks doubled in 2024 alone, and according to a study by BlackBerry, over 75% of organizations experienced a supply chain-related attack in the previous year. The Sonatype study also highlighted how prevalent malicious packages became in the last 12 months, with more than 500,000 malicious packages found in public repositories, a 156% increase from the previous year. This shows how attackers target upstream open source in order to compromise downstream users.

In light of these trends, development teams are seeking ways to ensure the integrity of their container images. Practices like reproducible builds and signed images are gaining popularity as defenses against tampering, while minimal images promise fewer vulnerabilities. However, implementing these measures requires significant effort and expertise. This is where Canonical’s latest offering comes in.

Canonical has launched a new Container Build Service designed to meet the above challenges head-on. In essence, through this service, Canonical’s engineers will custom-build container images for any open source project or stack, with security and longevity as primary features. Whether it’s an open source application or a custom base image containing all the dependencies for your app, Canonical will containerize it according to your specifications and harden the image for production. The resulting container image is delivered in the Open Container Initiative (OCI) format and comes with up to 12 years of security maintenance.

Every package and library in the container – even those not originally in Ubuntu’s repositories – is covered under Canonical’s security maintenance commitment. We have a track record of patching critical vulnerabilities within 24 hours on average, ensuring quick remediation of emerging threats. Unlike standard base images that cover only OS components, Canonical’s service will include all required upstream open source components in the container build. In other words, your entire open source dependency tree is kept safe – even if some parts of it were not packaged in Ubuntu before. This means teams can confidently use the latest frameworks, AI/ML libraries, or niche utilities, knowing Canonical will extend Ubuntu’s famous long-term support to those pieces as well.

Each container image build comes with a guaranteed security updates period of up to 12 years. This far outlasts the typical support window for community container images. It ensures that organizations in regulated or long-lived environments can run containers in production for a decade or more with ongoing patching.

The hardened images are designed to run on any popular Linux host or Kubernetes platform. Whether your infrastructure is Ubuntu, RHEL, VMware, or a public cloud Kubernetes service, Canonical will support these images on that platform. This broad compatibility means you don’t have to be running Ubuntu on the host to benefit: the container images are truly portable and backed by Canonical across environments.

Canonical’s build pipeline emphasizes reproducibility and automation. Once your container image is designed and built, an automated pipeline takes over to continuously rebuild and update the image with the latest security patches. This ensures the image remains up to date over time without manual intervention, and it provides a reproducible build process (verifiable by Canonical) to guarantee that the image you run in production exactly matches the source and binaries that were vetted.
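OCI registries identify content by digests of the form `sha256:<hex>`. One way to check that an artifact you are about to run is byte-for-byte the one that was vetted is to recompute its digest and compare it with the recorded one. Here is a minimal sketch, assuming you already have the artifact bytes and the expected digest from a trusted record. It does not verify signatures or fetch anything from a registry, and it is not a description of Canonical's pipeline.

```python
import hashlib
import hmac


def oci_style_digest(data: bytes) -> str:
    """Return a SHA-256 digest in the 'sha256:<hex>' form used by OCI descriptors."""
    return "sha256:" + hashlib.sha256(data).hexdigest()


def matches_vetted(data: bytes, expected_digest: str) -> bool:
    """True if the artifact's digest equals the recorded, vetted digest."""
    # compare_digest gives a constant-time comparison of the two ASCII strings.
    return hmac.compare_digest(oci_style_digest(data), expected_digest)


if __name__ == "__main__":
    vetted = oci_style_digest(b"example layer contents")  # hypothetical recorded value
    print(matches_vetted(b"example layer contents", vetted))   # unchanged artifact
    print(matches_vetted(b"tampered layer contents", vetted))  # modified artifact
```

With reproducible builds, an independent rebuild from the same sources should yield the same digest. That is what makes a digest comparison meaningful as evidence.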

In short, the new Container Build Service delivers secure, reproducible, and highly dependable container images, tailor-made for your applications by the experts behind Ubuntu. It effectively offloads the heavy lifting of container security maintenance to Canonical, so your teams can focus on writing code and deploying features and not constantly chasing the next vulnerability in your container image.

A standout aspect of Canonical’s approach is the use of chiseled Ubuntu container images. Chiseled images are Canonical’s take on the “distroless” container concept – ultra-minimal images that include only the essential runtime components needed by your application and nothing more. By stripping away unnecessary packages, utilities, and metadata, chiseled images dramatically reduce image size and attack surface.

What exactly are chiseled images? They are built using an open source tool called Chisel which effectively sculpts down an application to its bare essentials. A chiseled Ubuntu container image still originates from the Ubuntu base you know, but with all surplus components carved away.
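Chisel works from per-package slice definitions, named subsets of a package's files. The sketch below is neither Chisel's code nor its definition format. It is a hypothetical illustration of the underlying idea: pick only the named slices an application needs and keep just the files those slices match. The file list and slice names are invented for illustration.

```python
from pathlib import PurePosixPath

# Hypothetical file list for one package and hypothetical slice definitions.
PACKAGE_FILES = [
    "/usr/bin/python3.12",
    "/usr/lib/python3.12/os.py",
    "/usr/lib/python3.12/test/test_os.py",
    "/usr/share/doc/python3.12/README",
    "/usr/share/man/man1/python3.12.1.gz",
]

SLICES = {
    "core": ["/usr/bin/python3.12", "/usr/lib/python3.12/*.py"],
    "tests": ["/usr/lib/python3.12/test/*.py"],
    "docs": ["/usr/share/doc/python3.12/*", "/usr/share/man/man1/*"],
}


def select_files(files: list[str], slices: dict[str, list[str]], wanted: set[str]) -> list[str]:
    """Keep only files matched by a pattern belonging to one of the wanted slices.

    With absolute patterns, PurePosixPath.match requires the whole path to match,
    and '*' does not cross '/' boundaries.
    """
    patterns = [p for name in wanted for p in slices[name]]
    return sorted(f for f in files if any(PurePosixPath(f).match(p) for p in patterns))


if __name__ == "__main__":
    # A runtime image might want only the "core" slice: no tests, docs or man pages.
    print(select_files(PACKAGE_FILES, SLICES, {"core"}))
```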

Chiseled images include only the files and libraries strictly required to run your application, excluding surplus distro metadata, shells, package managers, and other tools not needed in production. Because of this minimalist approach, chiseled images are significantly smaller than typical Ubuntu images. This not only means less storage and faster transfer, but also inherently fewer places for vulnerabilities to hide. In a .NET container optimization exercise done by the ACA team at Microsoft, chiseling reduced the Backend API image size from 226 MB to 119 MB, a reduction of about 47%, and slashed CVEs from 25 to just 2, a 92% decrease. Packages also dropped from 451 to 328, leaving far fewer potential vulnerabilities to manage.
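The relative reductions can be recomputed directly from the before/after figures quoted for the .NET exercise. The small helper below expresses each reduction as a percentage of the original value.

```python
def percent_reduction(before: float, after: float) -> float:
    """Relative reduction from `before` to `after`, as a percentage of `before`."""
    if before == 0:
        raise ValueError("before must be non-zero")
    return (before - after) / before * 100


if __name__ == "__main__":
    figures = {
        "image size (MB)": (226, 119),
        "CVEs": (25, 2),
        "packages": (451, 328),
    }
    for label, (before, after) in figures.items():
        print(f"{label}: {before} -> {after}, {percent_reduction(before, after):.1f}% smaller")
```

With these inputs the script reports 47.3% for image size, 92.0% for CVEs and 27.3% for packages.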

With less bloat, chiseled containers start up faster and use less memory. They have only the essentials, so pulling images and launching containers is quicker. For example, chiseling the .NET runtime images trimmed about 100 MB from the official image and produced a runtime base as small as 6 MB (compressed) for self-contained apps. Such a tiny footprint translates to faster network transfers and lower memory overhead at scale.

By using chiseled Ubuntu images in its container builds, Canonical ensures that each container is as small and locked down as possible, while still being based on the world’s most popular Linux distribution for developers. It’s a combination that delivers strong security out of the box. And because these images are built on Ubuntu, they inherit Ubuntu’s long-term support policies. Our container images align with Ubuntu LTS release cycles and receive the same five years of free security updates, extended to ten years with Ubuntu Pro, for the core components. In the new build service, that support can stretch to 12 years for enterprise customers, keeping even the minimal runtime components patched against CVEs over the long term.

Canonical coined the term “Long Term Support (LTS)” back in 2006 with Ubuntu 6.06 LTS, pioneering the idea of stable OS releases with 5 years of guaranteed updates. Since then, Ubuntu LTS has become a byword for reliability in enterprises. In 2019, Canonical introduced Ubuntu Pro, which expanded on this foundation by providing comprehensive security maintenance not just for Ubuntu’s core system, but for thousands of community (universe) packages as well, along with enterprise features like FIPS 140 certified cryptography. Today, Ubuntu Pro is a very comprehensive open source security offering, covering over 36,000 packages with 10-year maintenance.

This background matters because the new Container Build Service is essentially Ubuntu Pro for your container images. Canonical is extending its expertise in automated patching, vulnerability remediation, and long-term maintenance to the full stack inside your containers. By having Canonical design and maintain your container image, you’re effectively gaining a dedicated team to watch over your software supply chain. Every upstream project included in your container is continually monitored for security issues. If a new vulnerability emerges in any layer of your stack – whether it’s in the OS, a shared library, or an obscure Python package – Canonical will proactively apply the patch and issue an updated image through the automated pipeline. All of this happens largely behind the scenes, and you receive notifications or can track updates as needed for compliance. It’s a level of diligence that would be costly and difficult to replicate in-house.

Furthermore, Canonical’s involvement provides a chain of custody and trust that is hard to achieve with self-built images. The containers are built and signed by Canonical using the same infrastructure that builds official Ubuntu releases, ensuring integrity. Canonical and its partners have even established a zero-distance supply chain for critical assets, meaning there’s tight integration and verification from source code to the final container artifact. This approach greatly reduces the risk of tampering or hidden malware in the supply chain.

Because Ubuntu is so widely trusted, Canonical’s container images come pre-approved for use in highly regulated environments. Notably, hardened Ubuntu container images are already certified and available in the U.S. Department of Defense’s “Iron Bank” repository, which is a collection of hardened containers for government use. By leveraging Canonical’s service, organizations inherit this level of credibility and compliance. It’s easier to meet standards like FedRAMP, DISA STIG, or the upcoming EU Cyber Resilience Act when your base images and components are backed by Ubuntu Pro’s security regime and provide auditable evidence of maintenance.

In summary, the Container Build Service stands on the shoulders of Ubuntu Pro and Canonical’s long experience in open source security. Your custom container isn’t just another bespoke image, it becomes an enterprise-grade artifact, with clear maintenance commitments and security SLAs that auditors and IT governance teams will appreciate.

Canonical’s Container Build Service aims to keep every layer of the container stack, from OS to app dependencies, maintained. With compact chiseled images, a decade or more of security updates, and Canonical’s support, these images are crafted for production.

Learn more about Canonical’s Container Build Service.

Get in touch to discuss securing your container stack today.

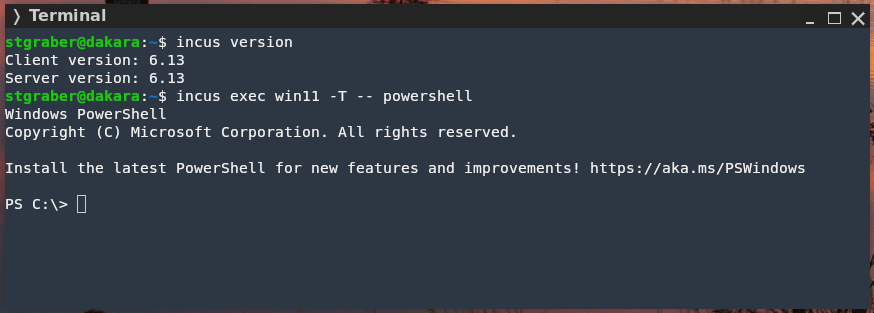

The Incus team is pleased to announce the release of Incus 6.13!

This is a VERY busy release with a lot of new features of all sizes and for all kinds of different users, so there should be something for everyone!

The highlights for this release are:

The full announcement and changelog can be found here.

And for those who prefer videos, here’s the release overview video:

You can take the latest release of Incus up for a spin through our online demo service at: https://linuxcontainers.org/incus/try-it/

And as always, my company is offering commercial support for Incus, ranging from by-the-hour support contracts to one-off services such as initial migration from LXD, a review of your deployment to get the most out of Incus, or even feature sponsorship. You’ll find all the details here: https://zabbly.com/incus

Donations towards my work on this and other open source projects are also always appreciated; you can find me on GitHub Sponsors, Patreon and Ko-fi.

Enjoy!

This blog has been running more or less continuously since the mid-nineties. The site has existed in multiple forms, with different ways to publish. But what’s common is that at almost all points there was a mechanism to publish while on the move.

In the early 2000s we were into adventure motorcycling. To be able to share our adventures, we implemented a way to publish blogs while on the go. The device that enabled this was the Psion Series 5, a handheld computer that was very much a device ahead of its time.

The Psion had a reasonably sized keyboard, a good native word processing app, and battery life good for weeks of use. Writing while underway was easy. The Psion could use a mobile phone as a modem over an infrared connection, and with that we could upload the documents to a server over FTP.

Server-side, a cron job would grab the new documents, converting them to HTML and adding them to our CMS.

In the early days of GPRS, getting this to work while roaming was quite tricky. But the system served us well for years.

If we wanted to include photos in the stories, we’d have to find an Internet cafe.

For an even more mobile setup, I implemented an SMS-based blogging system. We had an old phone connected to a computer back in the office, and I could write to my blog by simply sending a text. These would automatically end up as a new paragraph in the latest post. If I started the text with NEWPOST, an empty blog post would be created with the rest of that message’s text as the title.

As I got into neogeography, I could also send a NEWPOSITION message. This would update my position on the map, connecting weather metadata to the posts.

As camera phones became available, we wanted to do pictures too. For the Death Monkey rally where we rode minimotorcycles from Helsinki to Gibraltar, we implemented an MMS-based system. With that the entries could include both text and pictures. But for that you needed a gateway, which was really only realistic for an event with sponsors.

A much easier setup than MMS was to go back, more or less, to the old Psion approach, but sending email with picture attachments instead of word-processor documents. This was something the new breed of (pre-iPhone) smartphones was capable of. And by now the roaming question was mostly sorted.

And so my blog included a new “moblog” section. This is where I could share my daily activities as poor-quality pictures. Sort of how people would use Instagram a few years later.

Then there was sort of a long pause in mobile blogging advancements. Modern smartphones, data roaming, and WiFi hotspots had become ubiquitous.

In the meantime, the blog also got migrated to a Jekyll-based system hosted on AWS. That meant the old Midgard-based integrations were off the table.

And I traveled off-the-grid rarely enough that it didn’t make sense to develop a system.

But now that we’re sailing offshore, that has changed. Time for new systems and new ideas. Or maybe just a rehash of the old ones?

Most cruising boats - ours included - now run the Starlink satellite broadband system. This enables full Internet, even in the middle of an ocean, even video calls! With this, we can use normal blogging tools. The usual one for us is GitJournal, which makes it easy to write Jekyll-style Markdown posts and push them to GitHub.

However, Starlink is a complicated, energy-hungry, and fragile system on an offshore boat. Its policies might change at any time and rule out the way we use it, and the dishy itself, or the way we power it, may fail.

But despite what you’d think, even on a nerdy boat like ours, loss of Internet connectivity is not an emergency. And this is where the old-style mobile blogging mechanisms come in handy.

Our backup system to Starlink is the Garmin Inreach. This is a tiny battery-powered device that connects to the Iridium satellite constellation. It allows tracking as well as basic text messaging.

When we head offshore we always enable tracking on the Inreach. This allows both our blog and our friends ashore to follow our progress.

I also made a simple integration where text updates sent to Garmin MapShare get fetched and published on our blog. Right now this is just plain text-based entries, but one could easily implement a command system similar to what I had over SMS back in the day.
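The command system mentioned above could be as simple as matching the first word of each incoming message. Below is a minimal sketch that reuses the old NEWPOST and NEWPOSITION keywords from the SMS days. The function name `handle_message`, the tab-separated output, and the "lat lon" argument format are assumptions for illustration; the sketch only reports which action it would take and does not touch the blog itself.

```shell
#!/bin/sh
# Hypothetical dispatcher for text updates arriving via MapShare (or SMS).
# NEWPOST <title>        -> start a new post with that title
# NEWPOSITION <lat> <lon> -> update the map position (no validation here)
# anything else          -> append the text as a paragraph to the latest post
handle_message() {
  local msg cmd rest
  msg=$1
  cmd=${msg%% *}            # first word (whole message if there is no space)
  rest=
  case $msg in
    *' '*) rest=${msg#* } ;; # everything after the first space
  esac
  case $cmd in
    NEWPOST)     printf 'new-post\t%s\n' "$rest" ;;
    NEWPOSITION) printf 'position\t%s\n' "$rest" ;;
    *)           printf 'append\t%s\n' "$msg" ;;
  esac
}
```

Matching on the exact first word means a message that merely starts with similar letters (say, "NEWPOSTING later") is treated as ordinary text rather than a command.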

One benefit of the Inreach is that we can also take it with us when we go on land adventures. And it’d even enable rudimentary communications if we found ourselves in a liferaft.

The other potential backup for Starlink failures would be to go seriously old-school: it is possible to get email access via an SSB radio and a Pactor (or Vara) modem.

Our boat is already equipped with an isolated aft stay that can be used as an antenna. And with the popularity of Starlink, many cruisers are offloading their old HF radios.

Licensing-wise, this system could be used either as a marine HF radio (requiring a Long Range Certificate) or as an amateur radio. So that part is something I need to work on. Thankfully, since COVID, amateur radio license exams can be taken online.

With this setup we could send and receive text-based email. The Airmail application used for this can even do some automatic templating for position reports. We’d then need a mailbox that can receive these mails, and some automation to fetch and publish.
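For the "fetch and publish" automation, the publishing half could reuse the Jekyll setup the blog already runs on. Below is a minimal sketch, assuming the fetching step (not shown) has already pulled a title and a plain-text body out of the mail, and that the blog repository is checked out locally. `publish_post` is a hypothetical helper that writes a file following Jekyll's `_posts/YYYY-MM-DD-slug.md` naming convention.

```shell
#!/bin/sh
# Hypothetical helper: write a Jekyll post from a fetched message.
# $1 = path to the local blog checkout, $2 = title, $3 = plain-text body.
# Prints the path of the file it created.
# Limitation: a title containing double quotes would break the YAML front matter.
publish_post() {
  local repo title body day slug file
  repo=$1 title=$2 body=$3
  day=$(date -u +%Y-%m-%d)
  # Lowercase the title, turn runs of non-alphanumerics into hyphens, trim the ends
  slug=$(printf '%s' "$title" | tr '[:upper:]' '[:lower:]' |
    sed -E 's/[^a-z0-9]+/-/g; s/^-+//; s/-+$//')
  file="$repo/_posts/$day-$slug.md"
  mkdir -p "$repo/_posts"
  {
    printf '%s\n' '---'
    printf 'layout: post\n'
    printf 'title: "%s"\n' "$title"
    printf '%s\n\n' '---'
    printf '%s\n' "$body"
  } > "$file"
  printf '%s\n' "$file"
}
```

After the file is written, a `git commit` and `git push` in the checkout would publish it the same way GitJournal posts reach GitHub.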


05 June, 2025 12:00AM by Henri Bergius (henri.bergius@iki.fi)

Back in triumph from Oppidum Sena, where they caught a heat wave and a bellyful of roast kid, cheese and wine, our heroes bring news from Wikicon Portugal 2025 and tell us about their technological adventures, which include stripping down a Cervantes and catching felines called Felicity in strange corners of the Internet. On hand to welcome them was Princess Leia, a.k.a. Joana Simões, a.k.a. The Lady of the Rings, back from a glorious mission in Tokyo and about to leave for Mexico: the conversation will make the earth tremble beneath your feet and the satellites above your heads!

You know the drill: listen, subscribe and share!

This episode was produced by Diogo Constantino, Miguel and Tiago Carrondo and edited by Senhor Podcast. The website is produced by Tiago Carrondo and its open source code is licensed under the terms of the MIT License. (https://creativecommons.org/licenses/by/4.0/). The theme music is “Won’t see it comin’ (Feat Aequality & N’sorte d’autruche)” by Alpha Hydrae, licensed under the terms of the CC0 1.0 Universal License. This episode and the image used are licensed under the terms of the Attribution-NonCommercial-NoDerivatives 4.0 International (CC BY-NC-ND 4.0) license, whose full text can be read here. We are open to licensing for other types of use; contact us for validation and authorization. The episode art was commissioned from Shizamura, an artist, illustrator and comic book author. You can get to know Shizamura better on Ciberlândia and on her website.

If you’re looking for a low-power, always-on solution for streaming your personal media library, the Raspberry Pi makes a great Plex server. It’s compact, quiet, affordable, and perfect for handling basic media streaming—especially for home use.

In this post, I’ll guide you through setting up Plex Media Server on a Raspberry Pi, using Raspberry Pi OS (Lite or Full) or Debian-based distros like Ubuntu Server.

What You’ll Need

Step 1: Prepare the Raspberry Pi

sudo apt update && sudo apt upgrade -y

Step 2: Install Plex Media Server

Plex is available for ARM-based devices via their official repository.

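apt-key is deprecated on current Debian-based releases, so add Plex’s signing key to a dedicated keyring instead (install gnupg first if the gpg command is missing):

curl -fsSL https://downloads.plex.tv/plex-keys/PlexSign.key | gpg --dearmor | sudo tee /usr/share/keyrings/plex.gpg > /dev/null

echo "deb [signed-by=/usr/share/keyrings/plex.gpg] https://downloads.plex.tv/repo/deb public main" | sudo tee /etc/apt/sources.list.d/plexmediaserver.list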

sudo apt update

sudo apt install plexmediaserver -y

Step 3: Enable and Start the Service

Enable Plex on boot and start the service:

sudo systemctl enable plexmediaserver

sudo systemctl start plexmediaserver

Make sure it’s running:

sudo systemctl status plexmediaserver

Step 4: Access Plex Web Interface

Open your browser and go to:

http://<your-pi-ip>:32400/web

Log in with your Plex account and begin the setup wizard.

Step 5: Add Your Media Library

Plug in your external HDD or mount a network share, then:

sudo mkdir -p /mnt/media

sudo mount /dev/sda1 /mnt/media

Make sure Plex can access it:

sudo chown -R plex:plex /mnt/media

Add the media folder during the Plex setup under Library > Add Library.
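A manual `mount` only lasts until the next reboot, after which Plex would find an empty /mnt/media. Below is a minimal sketch for making the mount persistent, assuming the drive is /dev/sda1 and formatted as ext4 (adjust both). It builds an /etc/fstab line keyed on the filesystem UUID; `fstab_entry` is a hypothetical helper, while `blkid` and `mount -a` are the standard util-linux tools.

```shell
#!/bin/sh
# Hypothetical helper: print an /etc/fstab line for the media drive.
# Mounting by UUID keeps the entry valid even if /dev/sda1 later shows up as /dev/sdb1.
fstab_entry() {
  uuid=$1    # filesystem UUID, e.g. from blkid
  mnt=$2     # mount point, e.g. /mnt/media
  fstype=$3  # assumed ext4 here; match your drive's filesystem
  # nofail: don't fail the boot if the drive is unplugged
  # final field 2: fsck this filesystem after the root filesystem (0 skips the check)
  printf 'UUID=%s %s %s defaults,nofail 0 2\n' "$uuid" "$mnt" "$fstype"
}

# Usage on the Pi (assumes the drive is /dev/sda1):
#   uuid=$(sudo blkid -s UUID -o value /dev/sda1)
#   fstab_entry "$uuid" /mnt/media ext4 | sudo tee -a /etc/fstab
#   sudo mount -a   # an error here means the new line needs fixing before you reboot
```

Running `sudo mount -a` right after editing /etc/fstab catches typos while you still have a working session, rather than on the next boot.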

Optional Tips

Secure Your Server

Forward port 32400 only if you want remote access.

Conclusion

A Raspberry Pi might not replace a full-blown NAS or dedicated server, but for personal use or as a secondary Plex node, it’s surprisingly capable. With low energy usage and silent operation, it’s the perfect DIY home media solution.

If you’re running other services like Pi-hole or Home Assistant, the Pi can multitask well — just avoid overloading it with too much transcoding.

The post Building a Plex Media Server with Raspberry Pi appeared first on Hamradio.my - Amateur Radio, Tech Insights and Product Reviews by 9M2PJU.